The Gold Foil Experiment and The 250-Million-Ton Pea: The Composition of the Atom

February 23, 2013

This Atom Is Not To Scale

In a recent post about isotopic abundance, I used a prototypical image of a lithium atom to illustrate the basic structure of an atom. That image was deliberately not drawn to scale, so that the protons, neutrons, and electrons would be visible. To see why, let’s look at the basic composition of the atom, an understanding we owe to Ernest Rutherford. First, some historical background on what motivated Rutherford’s gold foil experiment: J.J. Thomson’s plum pudding model.

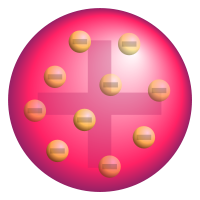

The Plum Pudding Model

Before 1911, the dominant theory of atomic composition was J.J. Thomson’s “plum pudding” model. Thomson hypothesized that an atom consisted of negatively charged electrons (the “plums”) “floating” in a “pudding” of positive charge.

Plum Pudding Model of the Atom

Source: Wikimedia Commons
