Applied Statistics Lesson of the Day – Polynomial Regression is Actually Just Linear Regression

June 19, 2014

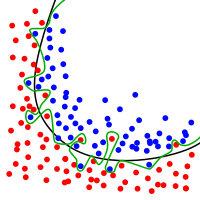

Continuing from my previous Statistics Lesson of the Day on what “linear” really means in “linear regression”, I want to highlight a common example involving this nomenclature that can mislead non-statisticians. Polynomial regression is a commonly used multiple regression technique; it models the systematic component of the regression model as a $p$th-order polynomial relationship between the response variable $Y$ and the explanatory variable $x$:

$$Y = \beta_0 + \beta_1 x + \beta_2 x^2 + \cdots + \beta_p x^p + \varepsilon,$$

where $\varepsilon$ is the random error term.
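One way to see this is to write the polynomial model in matrix form. The sketch below assumes Python with NumPy (the original post contains no code), and `polynomial_design_matrix` is a hypothetical helper: it builds a design matrix whose columns are the powers 0 through p of the explanatory variable, so that each power acts as its own explanatory variable.

```python
import numpy as np

def polynomial_design_matrix(x, p):
    """Return the n x (p+1) design matrix with columns 1, x, x^2, ..., x^p.

    Hypothetical helper: treating each power of x as a separate explanatory
    variable turns the polynomial model into Y = X @ beta + eps.
    """
    x = np.asarray(x, dtype=float)
    # Stack x^0, x^1, ..., x^p side by side as columns.
    return np.column_stack([x ** k for k in range(p + 1)])

X = polynomial_design_matrix([1.0, 2.0, 3.0], p=2)
print(X)  # each row is [1, x_i, x_i**2]
```

NumPy's `np.vander(x, p + 1, increasing=True)` builds the same matrix.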

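However, this model is still a linear regression model, because its systematic component is still a linear combination of the regression coefficients; the powers of the explanatory variable simply play the role of separate explanatory variables in a multiple regression. The regression coefficients are therefore still estimated with linear algebra through the method of least squares, exactly as in any other multiple linear regression.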
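A minimal sketch of that estimation, assuming Python with NumPy and simulated quadratic data (the true coefficients, noise level, and seed are made up for illustration): the powers of x go into an ordinary design matrix, which is then fit by ordinary least squares with `np.linalg.lstsq`. The result is checked against the normal equations and against NumPy's dedicated `np.polyfit`.

```python
import numpy as np

# Simulated data (illustrative assumption): quadratic mean plus Gaussian noise.
rng = np.random.default_rng(0)
x = np.linspace(-2.0, 2.0, 50)
true_beta = np.array([1.0, -0.5, 2.0])  # beta_0, beta_1, beta_2
y = true_beta[0] + true_beta[1] * x + true_beta[2] * x**2 + rng.normal(scale=0.3, size=x.size)

# Design matrix with columns 1, x, x^2: each power is just another explanatory variable.
X = np.column_stack([np.ones_like(x), x, x**2])

# Ordinary least squares, exactly as for any multiple linear regression.
beta_hat, *_ = np.linalg.lstsq(X, y, rcond=None)

# Same estimate from the normal equations (X^T X) beta = X^T y.
beta_normal = np.linalg.solve(X.T @ X, X.T @ y)

# np.polyfit fits the same model but returns coefficients highest power first.
beta_polyfit = np.polyfit(x, y, deg=2)[::-1]

print(beta_hat)
```

All three estimates agree up to floating-point error; `lstsq` is usually preferred over solving the normal equations directly because it is numerically more stable.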

Remember: the “linear” in linear regression refers to the linearity between the response variable and the regression coefficients, NOT between the response variable and the explanatory variable(s).
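To make the distinction concrete, here is a small sketch assuming Python with NumPy; `mean_response` is a hypothetical helper and the coefficient values are arbitrary. It checks that the systematic component is linear in the coefficients (a combination of coefficient vectors gives the same combination of mean responses) but not linear in the explanatory variable.

```python
import numpy as np

def mean_response(x, beta):
    """Hypothetical helper: systematic component beta_0 + beta_1 x + ... + beta_p x^p."""
    x = np.asarray(x, dtype=float)
    powers = np.column_stack([x ** k for k in range(len(beta))])
    return powers @ np.asarray(beta, dtype=float)

x = np.array([0.0, 1.0, 2.0, 3.0])
beta_a = np.array([1.0, 2.0, 3.0])
beta_b = np.array([-1.0, 0.5, 4.0])
a, b = 2.0, -3.0

# Linear in the coefficients: f(x; a*beta_a + b*beta_b) equals a*f(x; beta_a) + b*f(x; beta_b).
lhs = mean_response(x, a * beta_a + b * beta_b)
rhs = a * mean_response(x, beta_a) + b * mean_response(x, beta_b)
print(np.allclose(lhs, rhs))  # True

# Not linear in x: doubling x does not double the mean response.
print(np.allclose(mean_response(2 * x, beta_a), 2 * mean_response(x, beta_a)))  # False
```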
